My two most important digital humanities projects are the Distance Machine, a web application that identifies instances where a historical text is using a word that has not yet come into common use, and the Networked Corpus, which visualizes statistical topic models of texts. My articles on these projects can be found on my publications page.

I am currently working on PromptArray, a system for designing input text for large language models. PromptArray allows you to combine multiple prompts using operators such as and, or, and not through a syntax that unites aspects of both computer code and natural language.

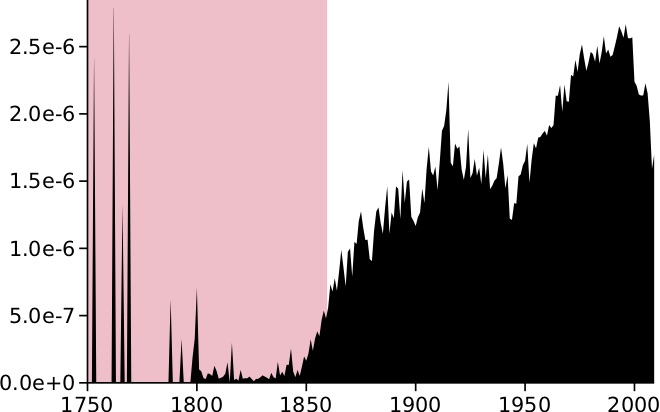

I am the developer and maintainer of the “bursts” package for the R statistical computing environment. This package implements Jon Kleinberg’s burst detection algorithm, which can be used to detect bursts of activity in streams of temporal data.
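To give a rough sense of how this kind of burst detection works, here is a minimal Python sketch of a Kleinberg-style state automaton run over the gaps between events. It is an illustration under stated assumptions, not the code of the "bursts" package: the function name `burst_states`, the parameters `s`, `gamma`, and `k`, and the fixed number of states are all choices made for this example, and it assumes strictly increasing event times.

```python
import math


def burst_states(offsets, s=2.0, gamma=1.0, k=2):
    """Label each gap between events with a burst level (0 = baseline).

    Hypothetical helper for illustration. Level i expects events at a rate
    s**i times the average rate; moving up a level costs gamma * ln(n) per
    level, and moving down is free.
    """
    gaps = [b - a for a, b in zip(offsets, offsets[1:])]
    n = len(gaps)
    base_rate = n / sum(gaps)
    rates = [base_rate * s ** i for i in range(k)]

    def emit(i, gap):
        # Negative log-likelihood of a gap under an exponential distribution.
        return -math.log(rates[i]) + rates[i] * gap

    def trans(i, j):
        # Only upward moves are penalized.
        return gamma * (j - i) * math.log(n) if j > i else 0.0

    # Viterbi search for the cheapest sequence of levels, starting from level 0.
    cost = [trans(0, j) + emit(j, gaps[0]) for j in range(k)]
    back = []
    for gap in gaps[1:]:
        new_cost, pointers = [], []
        for j in range(k):
            best = min(range(k), key=lambda i: cost[i] + trans(i, j))
            new_cost.append(cost[best] + trans(best, j) + emit(j, gap))
            pointers.append(best)
        back.append(pointers)
        cost = new_cost

    # Trace the cheapest path backwards.
    state = min(range(k), key=lambda j: cost[j])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path


# Made-up event times: sparse activity with a dense cluster in the middle.
events = [0, 10, 20] + list(range(30, 39)) + [48, 58, 68]
print(burst_states(events))
```

With this made-up data, the gaps inside the dense cluster are assigned to level 1 and the rest to level 0. Raising `gamma` makes the sketch more reluctant to declare a burst.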

I have done a number of experiments with algorithmically generated text. The Coleridge Bot is an algorithmic poetry generator that creates nonsense verse in rhymed meter. The Depoeticizer alters a text to make it less poetic, that is, more in line with the expectations created by a statistical language model. My most recent project in this vein is A Hundred Visions and Revisions, which uses a neural language model to rewrite poems to be about different topics while maintaining rhyme and meter.

I have also researched the history of algorithmic poetry, which goes back further than one might think. For a presentation I gave at Columbia University, I created a JavaScript-based simulation of Artificial Versifying, a poetry generator from all the way back in 1677.

I have also participated three times in Darius Kazemi’s National Novel Generating Month, which challenges people to write a computer program that produces a 50,000-word novel. My 2014 entry, The Story of John, compiles all the sentences mentioning the name “John” in a corpus of American fiction to create a single novel about a character called John. For the 2015 competition, I wrote a program called the Synonymizer that replaces every word in a text with a random synonym. I used this program to turn Henry James’s novel The Portrait of a Lady into The Portrayal of a Ma’am. In 2018, I created a neural network-based AI that attempts to determine what order sentences should appear in; I used it to reconstruct each chapter of Herman Melville’s novel Moby-Dick from its component sentences, producing Mboy-Dcki.
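The first two ideas are simple enough to sketch in a few lines of Python. What follows is a toy illustration, not the code of the actual entries: the function names, the naive sentence splitter, and the tiny hand-written `SYNONYMS` table are all assumptions made for this example, and a real version would presumably draw on a large corpus and a full thesaurus.

```python
import random
import re


def sentences_mentioning(text, name):
    """Return the sentences of `text` that contain `name` as a whole word."""
    # Naive splitter: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    pattern = re.compile(rf"\b{re.escape(name)}\b")
    return [s for s in sentences if pattern.search(s)]


# Hypothetical stand-in for a real thesaurus.
SYNONYMS = {
    "portrait": ["portrayal", "depiction"],
    "lady": ["ma'am", "madam"],
}


def synonymize(text, synonyms, rng):
    """Replace each word that has an entry in `synonyms` with a random one."""

    def swap(match):
        word = match.group(0)
        options = synonyms.get(word.lower())
        if not options:
            return word  # no known synonym: leave the word alone
        choice = rng.choice(options)
        # Keep the capitalization of the original word's first letter.
        return choice.capitalize() if word[0].isupper() else choice

    return re.sub(r"[A-Za-z']+", swap, text)


sample = "John went home. Mary stayed. Then Johnny left! Was John there?"
print(sentences_mentioning(sample, "John"))
print(synonymize("The Portrait of a Lady", SYNONYMS, random.Random(0)))
```

Passing in a seeded `random.Random` makes a given run repeatable, which is handy when a randomly generated title turns out to be worth keeping.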

In a moment of lockdown-induced boredom in mid-2020, I created an inverted clock where the hours move faster than the minutes.

Back in 2003, I created Homespring, an absurdist programming language based on the metaphor of data as fish swimming in rivers. Since then, a few other enthusiasts of esoteric programming languages have created their own versions. I have gathered links related to Homespring here.

The source code for most of these projects is available on my GitHub page.